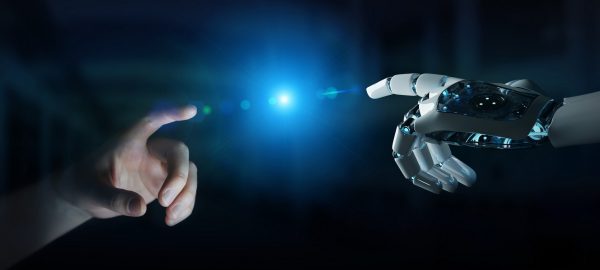

Effective robotic manipulation requires adaptability to varied objects and dynamic settings. Traditional rigid hands, while accurate, are limited by cost, fragility, and control complexity. Underactuated, compliant hands offer a simpler, more adaptable alternative but introduce uncertainty in hand-object interactions. This research addresses these challenges by equipping adaptive hands with advanced perception and learning algorithms. We developed tactile sensing for robust pose estimation in occluded environments and introduced AllSight, a high-resolution, 3D-printed tactile sensor that bridges the sim-to-real gap with zero-shot learning. We then applied reinforcement learning and haptic glances to improve insertion accuracy under spatial uncertainty. Building on this, we explored visuotactile perception to handle the specific demands of contact-rich tasks such as insertion.

Learning in-hand perception and manipulation with adaptive robotic hands


Faculty of Mechanical Engineering, Technion - Israel Institute of Technology, Haifa
