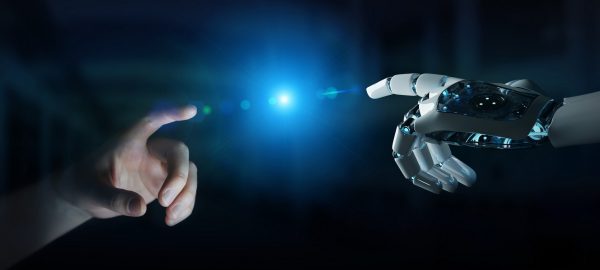

The statistical supervised learning framework assumes that input-output pairs are drawn from a joint probability distribution, and that the training dataset reliably represents this distribution. The learner must then output a prediction rule learned from the training dataset. Unfortunately, most supervised learning methods are highly demanding in terms of computational resources and training data (sample complexity). Moreover, trained models are sensitive to domain changes, such as different acquisition systems, signal sampling rates, resolution, and contrast.

In this talk, I will address a fundamental question: can a supervised learning model generalize well when trained on only a single example? To this end, I focus on an efficient patch-based learning framework that requires only a single input-output image pair for training. Experimental results demonstrate the applicability, robustness, and computational efficiency of the proposed approach for image restoration tasks across different fields: seismic imaging, medical image denoising, and low-level vision.
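
As a rough illustration of why a single image pair can still yield many training samples, the sketch below slices one aligned input-output pair into overlapping patches. It is not the framework presented in the talk: the function name `extract_patch_pairs`, the patch size, the stride, and the synthetic noisy/clean images are all assumptions chosen for the example.

```python
import numpy as np

def extract_patch_pairs(inp, out, patch=8, stride=4):
    """Slice aligned, overlapping patches from one input-output image pair.

    Hypothetical helper for illustration; patch and stride are arbitrary.
    Returns two arrays of shape (num_patches, patch * patch).
    """
    assert inp.shape == out.shape, "input and output images must be aligned"
    h, w = inp.shape
    xs, ys = [], []
    for i in range(0, h - patch + 1, stride):
        for j in range(0, w - patch + 1, stride):
            xs.append(inp[i:i + patch, j:j + patch].ravel())
            ys.append(out[i:i + patch, j:j + patch].ravel())
    return np.stack(xs), np.stack(ys)

# Synthetic stand-in for a single training pair (assumed, not real data):
# a random "clean" image and a noisy copy of it.
rng = np.random.default_rng(0)
clean = rng.random((64, 64))
noisy = clean + 0.1 * rng.standard_normal((64, 64))

X, Y = extract_patch_pairs(noisy, clean)
print(X.shape, Y.shape)
```

With these assumed settings, one 64×64 pair already produces a few hundred patch-level samples, which is the sense in which a patch-based learner can be trained from a single example.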