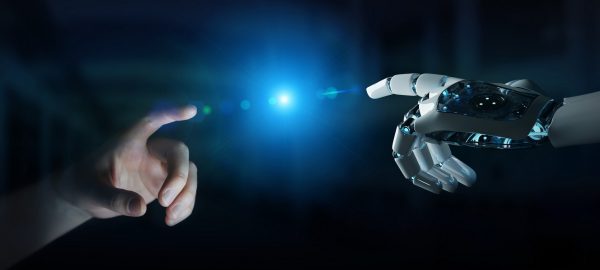

Robotic systems are increasingly used in high-stakes domains that demand precision and safety, and surgical robotics is a prominent example. In surgical robotics, the surgeon's technical proficiency is critical to patient outcomes. Yet surgical training still relies heavily on human instructors, which makes it costly, time-consuming, and difficult to scale. My vision is to close this gap by developing intelligent robotic systems that automatically deliver adaptive feedback comparable to that of a human trainer.

In my doctoral research, I developed quantitative methods for evaluating surgical performance. These include novel orientation-based skill metrics and movement analysis approaches inspired by computational motor control. I designed and tested training protocols that applied force and motion perturbations to make surgical skills more robust. I also led a longitudinal study with surgical residents, which produced a unique dataset on their long-term skill acquisition and on the effects of fatigue on their performance.

In my postdoctoral work, I built on these foundations to lay the groundwork for intelligent feedback systems in robotics. To make surgical robots more aware of their operators, I integrated multi-modal sensing, including tool vibration measurements and eye tracking, into robotic platforms. I also launched projects that use artificial intelligence to generate feedback autonomously.

In this talk, I will present selected projects that demonstrate how modelling human movement, designing targeted interventions, and integrating advanced sensing with robotic control can advance skill development and human-robot collaboration. I will conclude with future directions toward robotic systems capable of adaptive, multi-modal feedback. These directions apply to surgical robotics and to other domains where humans and robots work together on demanding tasks.